After our previous post breaking down how to set up and deploy to Samsung’s GearVR, we took on the challenge of converting one of our in-house DK2 demos to run on it. It wasn’t simply a matter of plug-and-play with the Unity game engine. We had to completely rethink how we had optimized 3D geometry in the past, because of differences between the PC and mobile platforms such as the importance of draw calls. We also had to pay close attention to polycount because of the relatively low limit on mobile hardware. Last but not least, one of the key factors of a good VR demo is an intuitive input method, so we experimented with the Gear’s touchpad until we found a solid solution. The model we worked with was a simple solar-powered house design that was originally modeled in Revit and then brought into 3DS Max.

Draw Calls

Draw calls represent the number of times the engine has to ask the GPU to draw objects on the screen. They are especially crucial in mobile development, where the number of draw calls can be a major bottleneck. To optimize the performance of our scene on the GearVR, we had to cut the number of draw calls by combining (or “batching”) all of the objects that share the same material.
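The following minimal Python sketch makes the effect of batching concrete. The demo itself was built in Unity, and nothing here is Unity API. The sketch assumes a simplified cost model where every object costs exactly one draw call, so combining objects by material leaves one call per distinct material. Real engines can issue extra calls for multiple passes, shadows, or lighting. `SceneObject` and the object and material names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneObject:
    # Hypothetical stand-in for a mesh in the scene: a name plus the
    # single material it renders with.
    name: str
    material: str


def draw_calls_unbatched(objects):
    # Simplified model: every separate object costs one draw call.
    return len(objects)


def draw_calls_batched(objects):
    # After combining objects that share a material, one draw call
    # per distinct material remains.
    return len({obj.material for obj in objects})


def batch_by_material(objects):
    # Group object names by material; each group becomes one combined mesh.
    groups = defaultdict(list)
    for obj in objects:
        groups[obj.material].append(obj.name)
    return dict(groups)


# Hypothetical sample scene.
scene = [
    SceneObject("wall_north", "plaster"),
    SceneObject("wall_south", "plaster"),
    SceneObject("roof_panel_a", "metal"),
    SceneObject("roof_panel_b", "metal"),
    SceneObject("window", "glass"),
]
print(draw_calls_unbatched(scene), draw_calls_batched(scene))
print(batch_by_material(scene))
```

In this toy scene, five objects drop to three draw calls. The saving grows with the number of objects that share each material.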

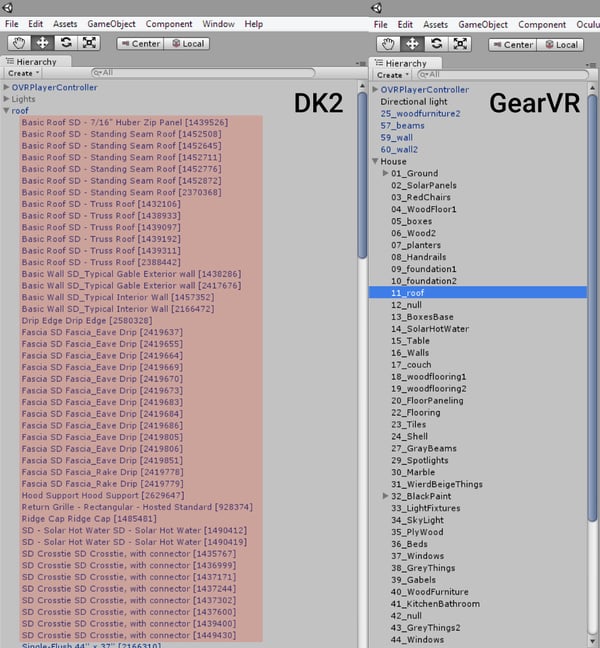

To do this, we imported the model into 3DS Max and collapsed all of the objects that shared a material by selecting them and choosing Collapse in the Utilities menu. This process can take a long time, depending on the size of the model and the number of materials in it. Objects that have different materials on different faces are trickier. In some cases we had to explode those objects, group the surfaces that share the same material, and then collapse them. With larger files, it’s better to divide the model into rooms and then batch the objects that share the same materials within each room. For example, the image below shows how we collapsed all of the objects in the roof into a single object for the GearVR project.

Note: During the collapse process, make sure the Boolean options are unchecked, because leaving them enabled can corrupt the geometry and tangents of the objects you are collapsing.
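Conceptually, collapsing a group of meshes concatenates their vertex lists and renumbers each face so it still points at its own vertices. The Python sketch below illustrates that idea, with meshes grouped per room and per material as described above. The `Mesh` class and the sample data are hypothetical. This is not how 3DS Max implements Collapse internally. It only shows what a combined mesh contains.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Mesh:
    # Hypothetical minimal mesh: vertex positions plus triangles given
    # as index triples into `vertices`.
    room: str
    material: str
    vertices: list
    triangles: list


def merge_meshes(meshes):
    # Concatenate vertex lists and shift each mesh's triangle indices by
    # the number of vertices already added, so every triangle still
    # refers to its own vertices in the combined list.
    vertices, triangles = [], []
    for mesh in meshes:
        offset = len(vertices)
        vertices.extend(mesh.vertices)
        triangles.extend(tuple(i + offset for i in tri) for tri in mesh.triangles)
    return vertices, triangles


def collapse_by_room_and_material(meshes):
    # One merged mesh per (room, material) pair, mirroring the
    # "divide into rooms, then collapse by material" approach.
    groups = defaultdict(list)
    for mesh in meshes:
        groups[(mesh.room, mesh.material)].append(mesh)
    return {key: merge_meshes(group) for key, group in groups.items()}


# Hypothetical sample: two metal roof panels and one kitchen tile.
tri = [(0, 0, 0), (1, 0, 0), (0, 1, 0)]
panel_a = Mesh("roof", "metal", tri, [(0, 1, 2)])
panel_b = Mesh("roof", "metal", [(0, 0, 1), (1, 0, 1), (0, 1, 1)], [(0, 1, 2)])
tile = Mesh("kitchen", "tile", tri, [(0, 1, 2)])
collapsed = collapse_by_room_and_material([panel_a, panel_b, tile])
print(collapsed[("roof", "metal")][1])  # the second panel's indices are offset by 3
```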

Polycount

Polycount is the number of polygons that make up each 3D object, and it’s an important factor to consider in any 3D project. With the GearVR, we were even more constrained than usual because of the limited power of the Galaxy Note 4. A good way to relieve a polycount bottleneck is to find the objects in the scene with the highest polycount and optimize them by removing unneeded vertices. In 3DS Max, you can open the Manage Layers menu, add Faces to the columns, sort the list from highest to lowest polycount, and then optimize or remove the objects with the most polygons.

In 3DS Max, optimizing an object is as simple as selecting Pro Optimizer from the modifier list and choosing the percentage by which you want to reduce it. Usually it’s a matter of finding a sweet spot that lowers the polycount while retaining high visual fidelity.
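The workflow of handling the heaviest objects first can be sketched as a small greedy planner in Python. It assumes you have a per-object face count (the hypothetical `face_counts` dictionary, for example copied from the Faces column) and a polycount budget you choose yourself. It ranks objects from highest to lowest and reduces each one only as much as needed to fit the budget. The `min_keep` floor stands in for the fidelity sweet spot. The budget, the floor, and the sample numbers are assumptions, not figures from our project. The keep fraction is measured in faces, which may not map one-to-one onto Pro Optimizer’s own percentage setting.

```python
def rank_by_polycount(face_counts):
    # face_counts maps object name -> number of faces (hypothetical data).
    # Returns (name, faces) pairs, highest polycount first.
    return sorted(face_counts.items(), key=lambda item: item[1], reverse=True)


def plan_reduction(face_counts, budget, min_keep=0.2):
    # Greedy sketch: visit objects from highest to lowest polycount and
    # shrink each one only as much as needed to fit `budget`, never keeping
    # less than `min_keep` (a fraction) of any object's faces.
    # Returns (plan, estimated_total), where plan maps name -> keep fraction.
    # If the floor is reached everywhere, estimated_total can exceed budget.
    total = sum(face_counts.values())
    plan = {}
    for name, faces in rank_by_polycount(face_counts):
        excess = total - budget
        if excess <= 0:
            break
        removable = faces * (1.0 - min_keep)
        removed = min(excess, removable)
        plan[name] = (faces - removed) / faces
        total -= removed
    return plan, total


# Hypothetical face counts for a few objects.
scene = {"roof": 120000, "chair": 40000, "lamp": 38000, "wall": 2000}
print(rank_by_polycount(scene)[:2])
print(plan_reduction(scene, budget=100000))
```

Reducing the heaviest objects first tends to leave small, already cheap objects untouched, which matches the idea of targeting the top of the sorted list.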

Navigation

Because several shipments of the GearVR came without a Bluetooth controller, we decided to find a way of navigating the demo that didn’t require any external controller at all. This limited us to the two input sources on the GearVR itself: the back button and the touchpad.

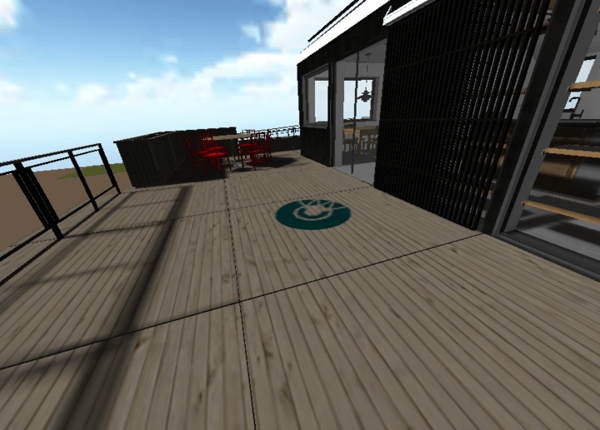

While this seemed daunting at first, the freedom of a fully wireless headset let us take full advantage of 360-degree head tracking. Our final input system used a “touch and go” mechanic, where the user would look wherever they wanted to walk and then tap once on the touchpad. By casting a ray at that instant from the center of the user’s view to wherever they were looking, and confining movement to the trajectory of that ray, we found that testers were able to move intuitively and precisely. We improved precision further by drawing a “cursor” at the point where the invisible ray hits the currently observed target, so users could see where they would end up before tapping to move there.
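The geometry behind “touch and go” can be sketched in a few lines of Python. This is a hedged illustration, not our Unity code. It assumes the walkable surface is a flat floor at height 0. A Unity version would more likely raycast against scene colliders. `Vec3`, `gaze_hit`, and `on_tap` are hypothetical names. `gaze_hit` returns the point where the ray from the center of view meets the floor, which is where the cursor would be drawn. `on_tap` moves the viewer to that point at a fixed eye height.

```python
from dataclasses import dataclass

FLOOR_Y = 0.0  # assumption: the walkable surface is a flat floor at y = 0


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other):
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def scale(self, s):
        return Vec3(self.x * s, self.y * s, self.z * s)


def gaze_hit(head, gaze):
    # Cast a ray from the head position along the gaze direction and return
    # where it meets the floor plane (the cursor position), or None.
    if gaze.y >= 0:
        return None  # looking level or upward: the ray never reaches the floor
    t = (FLOOR_Y - head.y) / gaze.y  # ray parameter at the floor plane
    return head + gaze.scale(t)


def on_tap(head, gaze, eye_height):
    # "Touch and go": on a single tap, move along the ray to the cursor
    # point, keeping the viewer's eyes at a fixed height above the floor.
    hit = gaze_hit(head, gaze)
    if hit is None:
        return head  # no valid target, so stay put
    return Vec3(hit.x, FLOOR_Y + eye_height, hit.z)


head = Vec3(0.0, 1.6, 0.0)
gaze = Vec3(0.0, -0.5, 1.0)  # looking forward and down
cursor = gaze_hit(head, gaze)
new_head = on_tap(head, gaze, eye_height=1.6)
print(cursor, new_head)
```

This sketch jumps straight to the target. An actual implementation could instead animate the move along the ray, and it would need to handle targets that are out of reach.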
