A.T. Chadwick is a mechanical contracting firm serving the Delaware Valley area. The firm has played a role in the construction of many of the Philadelphia skyline’s most iconic buildings. Today, the company continues in that tradition while foregrounding new, modern construction practices.

Topics: VR Case Studies, VR collaboration, Architecture Engineering Construction, AEC

For Security and Scalability, Check Out the New Meta Quest for Business (Beta)

The new Meta Quest for Business (beta) is now available for U.S.-based businesses and employees. According to Meta, the subscription service (currently free during beta) makes it easy to deploy, manage, and scale Meta Quest headsets within your company. This is great news for architecture, engineering, and construction (AEC) firms seeking to add or grow VR usage within their teams.

In past iterations of this program, our AEC customers have shared concerns about enterprise-level support, data privacy and security, and the availability of custom applications to support business needs. With this new program, Meta aims to address these issues and more.

Topics: VR collaboration, Architecture Engineering Construction, Meta Quest for Business

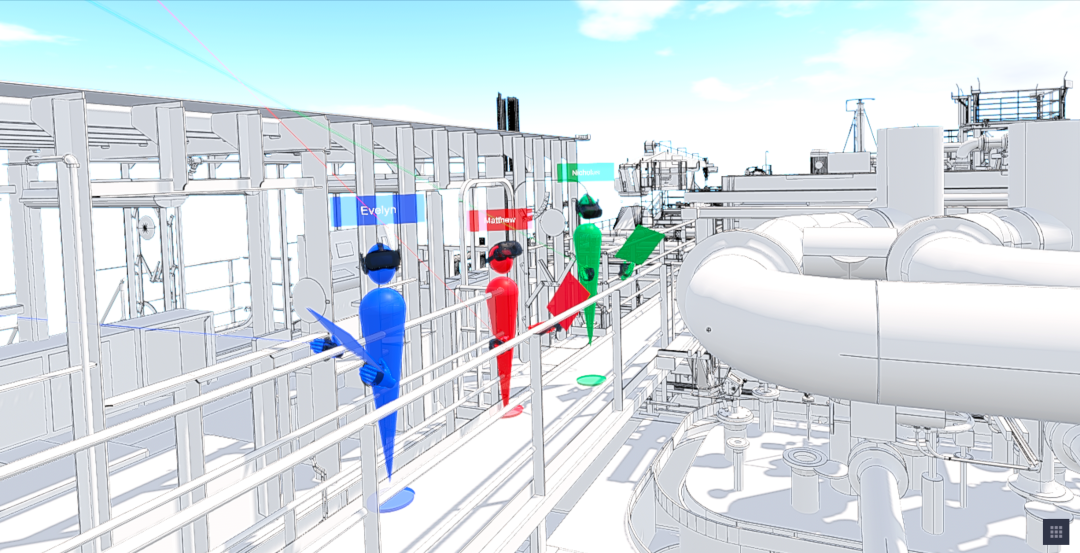

3 Ways to Use VR for Meetings in Architecture, Engineering and Construction

The architecture, engineering, and construction (AEC) industry faces a new reality of work, with the trend toward remote and hybrid work showing no sign of ending. Still, facilitating genuine and meaningful interaction among peers and clients is paramount for establishing culture in today’s work environment.

Topics: VR collaboration, VR meetings, Architecture Engineering Construction

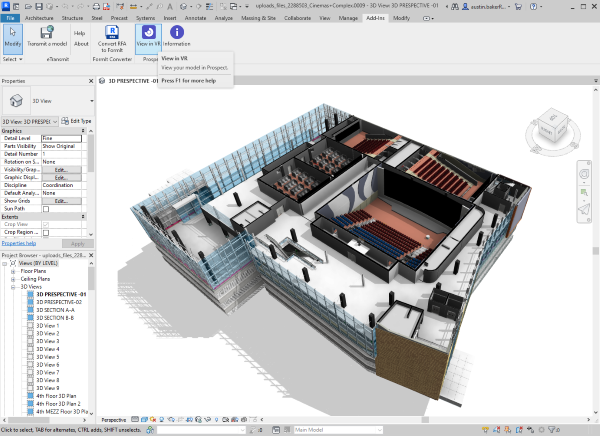

Revit to VR: How to Run True-to-Scale BIM Design Review Meetings

Did you know that virtual reality (VR) can take Revit workflows to the next level by enabling true-to-scale design review and collaboration in unbuilt environments?

Topics: VR collaboration, Revit to Virtual Reality

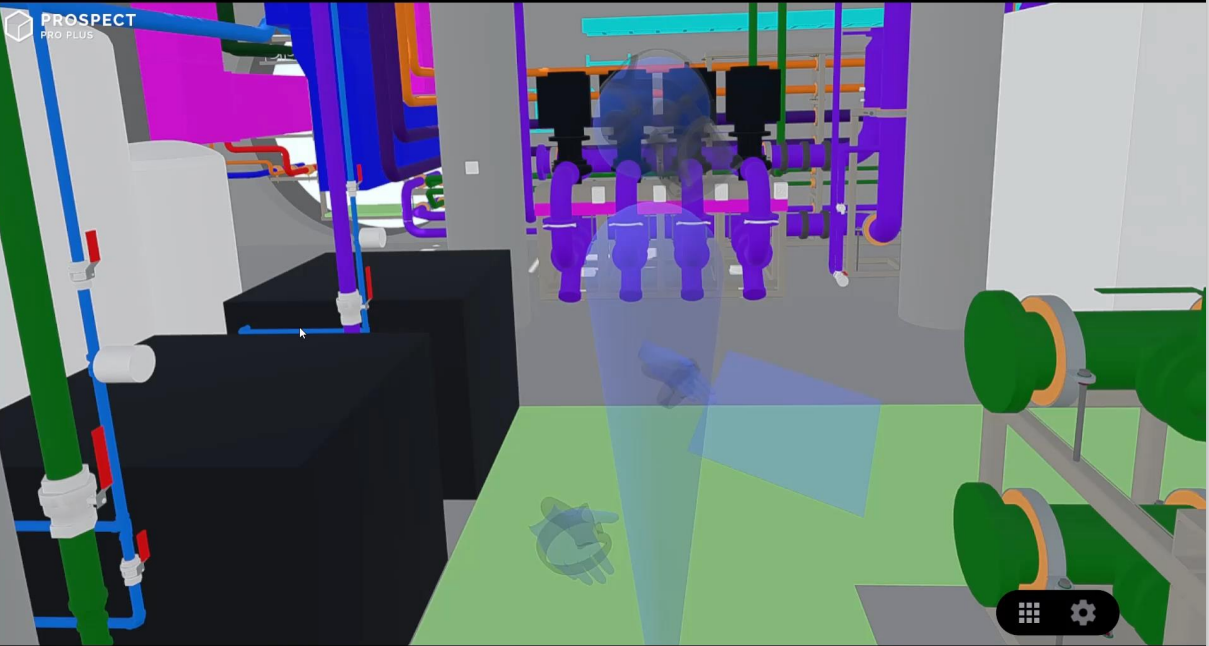

Based in Northern Ireland, Kane is a leading mechanical, electrical, and plumbing (MEP) contractor that specializes in public health, prefabrication, and fit-out projects. Kane delivers projects within the residential, hospitality, education, commercial, and healthcare sectors. Using Prospect by IrisVR, Kane coordinated and visualized one of its most unique projects: Claridge’s Hotel, London. The team completed a full MEP installation, including design and prefabrication of a £12 million energy center five floors below ground level.

Topics: VR Case Studies

Learn How a Specialty Engineering Firm Uses VR For Collaboration

Schnabel Engineering, Glen Allen, VA, is a specialty civil engineering consultant that specializes in geotechnical, dams and levees, and tunnel engineering services. This $90M+/year firm focuses on using technology in innovative ways to increase efficiency, reduce risk, and enhance the client experience.

Topics: VR Case Studies

We are thrilled to announce that IrisVR is joining forces with Autodesk. It wasn’t that long ago that IrisVR joined The Wild, united by a shared vision of providing immersive collaboration tools to the architecture, engineering, and construction (AEC) industry to drive efficiency and deliver value to our users.

Human-Centered Design: How to Empower Stakeholders with VR Pre-Occupancy Evaluations

This is the fourth and final post in a series about tech-enabled pre-occupancy evaluations, which are opening up a new world of possibilities for AEC. To read more from this series, see our three previous posts on how pre-occupancy evaluations can drive higher quality project delivery, reduce waste and rework, and take internal design processes to the next level.

Topics: VR collaboration, human-centered design

How BIM Teams Can Unlock Full-Lifecycle Coordination with Prospect’s VR Issue Tracking

Costly Coordination Errors Demand a Digital Transformation

On average, construction job site errors waste $1 trillion each year. Trying to interpret final 2D construction documents on site, when they are derived from increasingly complex Revit or Navisworks models, leads to an abundance of errors, costly rework, and delays.
